ChatGPT reportedly accused innocent man of murdering his children

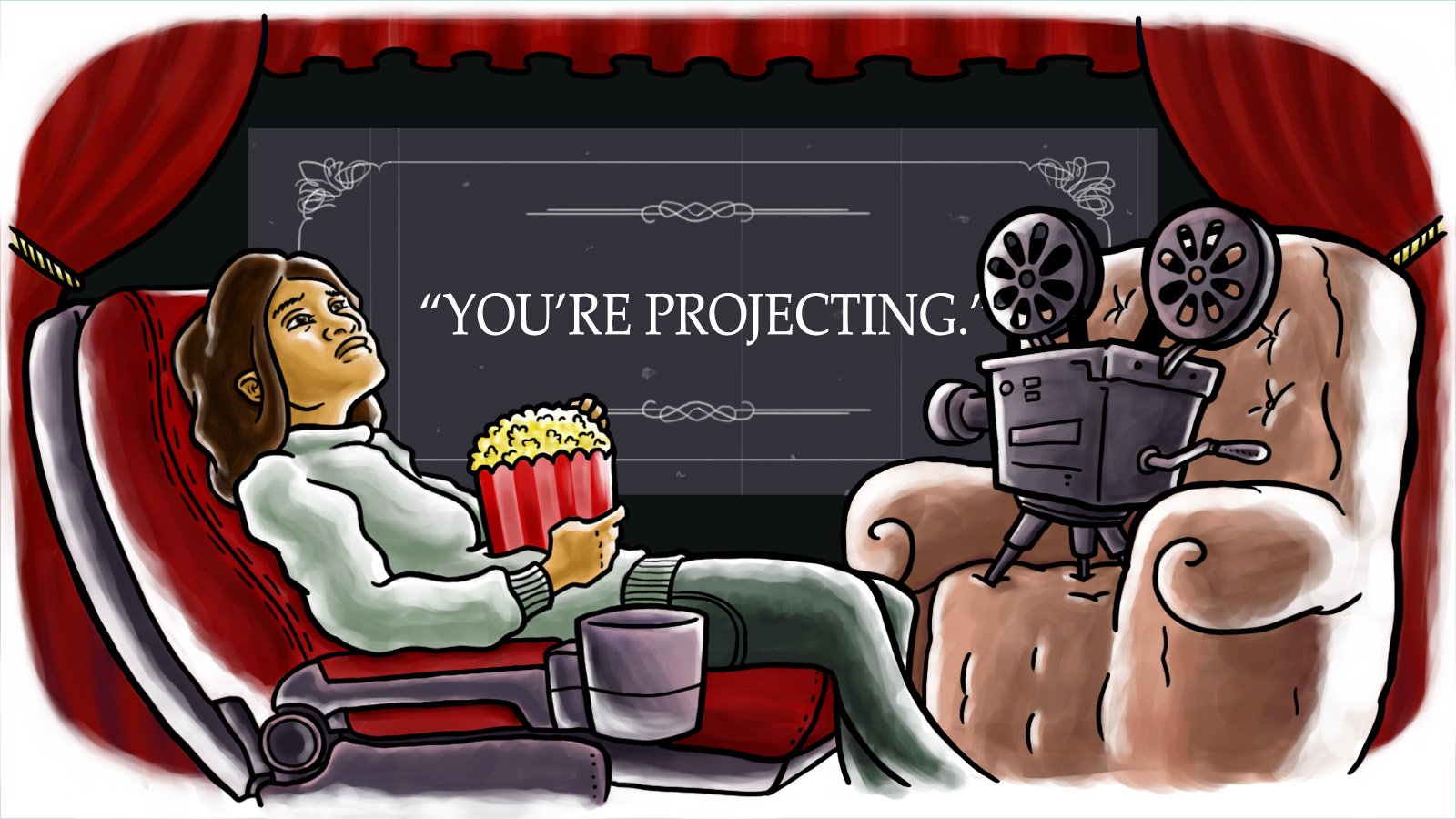

It has been over two years since ChatGPT exploded onto the world stage and, while OpenAI has advanced it in many ways, there are still quite a few hurdles. One of the biggest issues: hallucinations, or stating false information as fact. Now, Austrian advocacy group Noyb has filed its second complaint against OpenAI over such hallucinations, naming a specific instance in which ChatGPT reportedly, and wrongly, stated that a Norwegian man was a murderer.

To make matters somehow even worse, when this man asked ChatGPT what it knew about him, it reportedly stated that he had been sentenced to 21 years in prison for killing two of his children and attempting to murder his third. The hallucination was also sprinkled with real information, including the number of children he had, their genders and the name of his hometown.

Noyb claims that this response put OpenAI in violation of GDPR. "The GDPR is clear. Personal data has to be accurate. And if it's not, users have the right to have it changed to reflect the truth," Noyb data protection lawyer Joakim Söderberg stated. "Showing ChatGPT users a tiny disclaimer that the chatbot can make mistakes clearly isn’t enough. You can’t just spread false information and in the end add a small disclaimer saying that everything you said may just not be true."

Other notable instances of ChatGPT's hallucinations include accusing one man of fraud and embezzlement, a court reporter of child abuse and a law professor of sexual harassment, as reported by multiple publications.

Noyb's first complaint to OpenAI about hallucinations, in April 2024, focused on a public figure's inaccurate birthdate (so not murder, but still inaccurate). OpenAI had rebuffed the complainant's request to erase or update their birthdate, claiming it couldn't change information already in the system, only block its use on certain prompts. ChatGPT relies on a disclaimer that it "can make mistakes."

Yes, there is an adage that goes something like: everyone makes mistakes, that's why they put erasers on pencils. But when it comes to an incredibly popular AI-powered chatbot, does that logic really apply? We'll see if and how OpenAI responds to Noyb's latest complaint. This article originally appeared on Engadget at https://www.engadget.com/ai/chatgpt-reportedly-accused-innocent-man-of-murdering-his-children-120057654.html?src=rss
