Inside the AI Ground War Against Celebrity Deepfakes

"Digital bodyguard" firm Loti offers a glimpse into the daily battle to fight AI-generated content that misappropriates famous identities The post Inside the AI Ground War Against Celebrity Deepfakes appeared first on TheWrap.

In January, a French woman lost $900,000 after falling victim to scammers posing as Brad Pitt who convinced her to send money for medical treatments. The elaborate scheme, which lasted over a year, featured artificial intelligence-generated photos showing the actor in a hospital bed, supposedly battling kidney cancer. Pitt’s representatives later issued a statement expressing dismay that “scammers take advantage of fans’ strong connection with celebrities.”

These scams can also work at scale. This month, investigators uncovered an operation out of Tbilisi, Georgia, that used deepfakes and fabricated news reports to promote cryptocurrency schemes featuring well-known British personalities, including financial expert Martin Lewis, radio DJ Zoe Ball, and adventurer Ben Fogle. The operation defrauded thousands of victims of $35 million.

Welcome to the 24/7 reality confronting the world of celebrity and fame in these times, with no larger target than star-laden Hollywood.

While AI is the culprit in many of these cases, the weapons to fight that battle are being powered by AI as well, highlighting a new market opportunity for digital upstarts looking to be on the frontlines for studios and talent agencies.

Enter Loti, a self-described “likeness protection technology” firm that has a partnership with talent agency giant WME and secured $7 million in seed funding last fall. It is positioning itself as a solution to a growing problem: the proliferation of AI-generated content that misappropriates celebrities’ faces and voices without permission.

“Coming into this area, you can’t really fight technology without having technology in support of what you’re doing,” Chris Jacquemin, WME partner and head of digital strategy, told TheWrap last fall.

The size of the problem is staggering. According to DeepMedia, another company working on AI detection tools, approximately 500,000 audio and visual deepfakes were shared on social media in 2023. For the biggest stars, the impact can be severe. In January 2024, X blocked searches for Taylor Swift after pornographic deepfakes of the singer went viral on the platform.

Lawmakers are trying to pass laws that could create consequences for people making these nonconsensual images. U.S. Senators Chris Coons (D-Delaware), Amy Klobuchar (D-Minnesota), Marsha Blackburn (R-Tennessee) and Thom Tillis (R-North Carolina) plan to reintroduce the NO FAKES Act within weeks, according to CNN. This legislation joins the Take It Down Act, which targets AI-generated deepfake pornography and recently gained support from First Lady Melania Trump.

“Everyone deserves the right to own and protect their voice and likeness, no matter if you’re Taylor Swift or anyone else,” Sen. Coons said when the NO FAKES Act was first introduced in 2024.

But even if the legislation passes, images will continue to get made, keeping companies like Loti busy. Luke Arrigoni, Loti’s chief executive, describes an ecosystem of unauthorized content that ranges from merely annoying to potentially devastating. The company employs a tiered approach to removal requests, recognizing that not all AI-generated media warrants the same response.

The most egregious category involves explicit material, which platforms typically remove promptly upon notification. Far more prevalent and challenging are impersonator accounts on social media platforms, which Arrigoni said can inflict substantial economic harm. For a single high-profile client, Loti might issue as many as 1,000 takedown requests in a day.

When it comes to fan-made content, Loti clients take a more nuanced approach. Some clients distinguish between harmful impersonation and benign creative content, leading to varying standards for what gets flagged for removal.

“There’s a sliding scale,” Arrigoni said. “I would say half of our clients don’t even care about the benign. If it’s not anything too crazy, they’ll say you don’t need to take it down.”

While Arrigoni declines to identify specific clients, he acknowledges that Loti serves individuals across entertainment, politics and corporate leadership, including military officials, politicians, chief executives, estate managers of deceased celebrities and numerous musicians, particularly in the country genre.

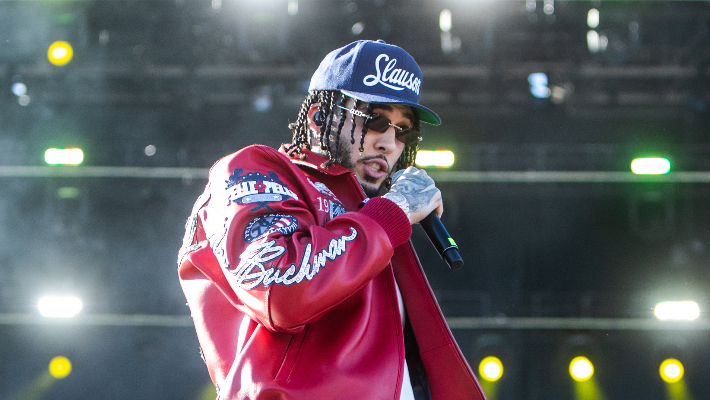

He hints at a roster that includes “a famously introverted adrenaline junkie with a penchant for long coats,” “Hollywood’s most trusted everyman,” “a podcast philosopher who moonlights as a combat sports enthusiast,” and “a hip-hop icon whose signature flow and laid-back delivery helped define the genre’s evolution.” The company also monitors accounts of associates connected to celebrities, such as managers or family members, who are frequently impersonated in sophisticated scams targeting fans.

100 million scans a day

The company’s approach represents a technological advancement over previous methods, in which unauthorized content was identified haphazardly by fan communities who would alert management teams. Those teams would then engage attorneys to negotiate removals — a process ill-suited to the volume and velocity of today’s digital environment.

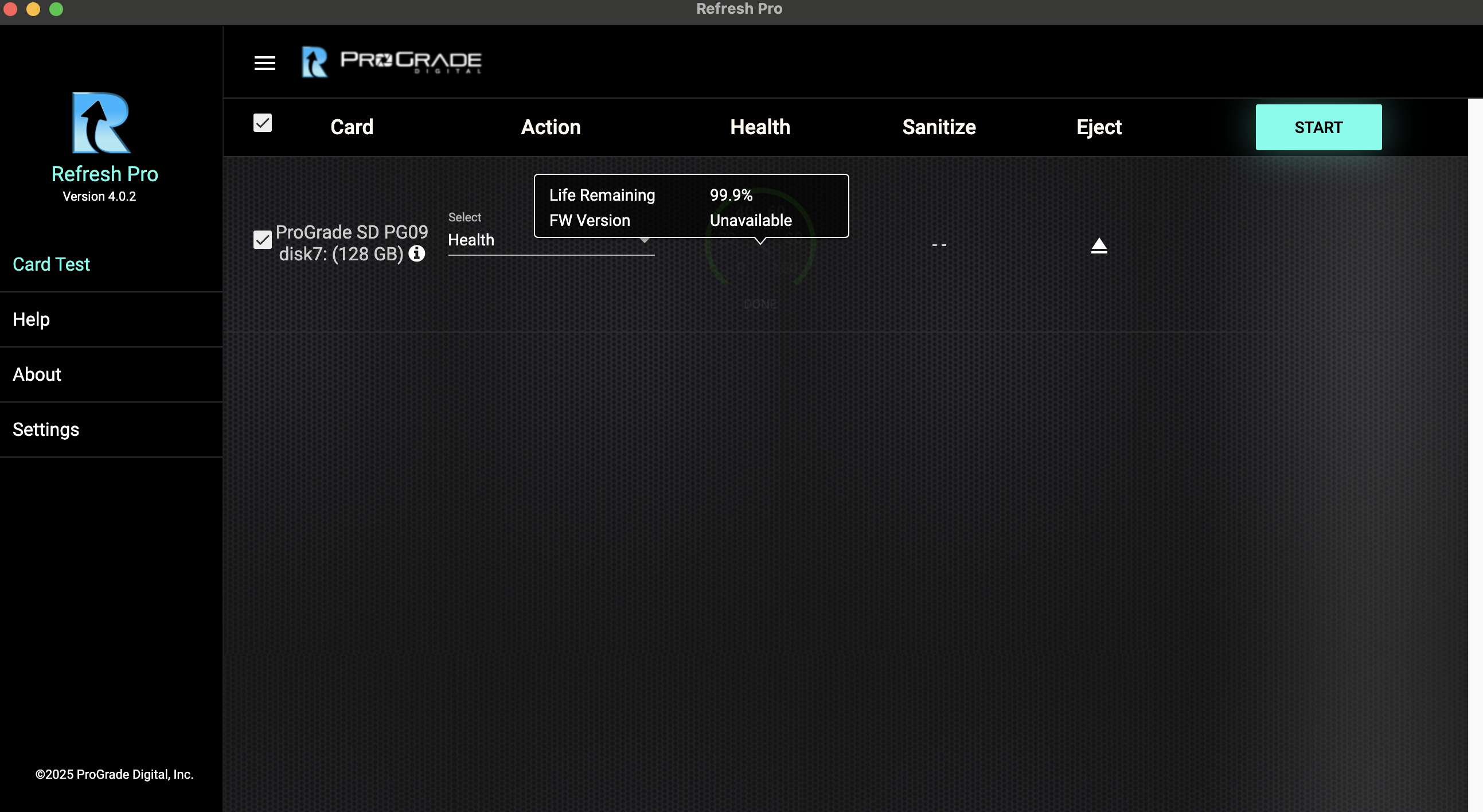

Loti’s system scans approximately 100 million images and videos daily, using 40 different AI models across six services to identify unauthorized content. Its methods include face recognition, voice recognition and impersonation detection, and the company claims a 95% success rate in removing offending material within 17 hours.

Unlike traditional “fingerprinting” techniques, which spot copyright violations but become ineffective when faces are altered or backgrounds changed, Loti’s technology becomes more precise as AI-generated content grows increasingly sophisticated.

“The better the technology gets, the more realistic it looks, the easier our stuff has to work to find it,” Arrigoni said.

For a standard monthly fee of $2,500, clients receive unlimited takedown requests — a pricing model that deliberately avoids penalizing those who face disproportionate targeting, particularly women.

“These people are being victimized,” Arrigoni said. “We’re not trying to be war profiteers.”

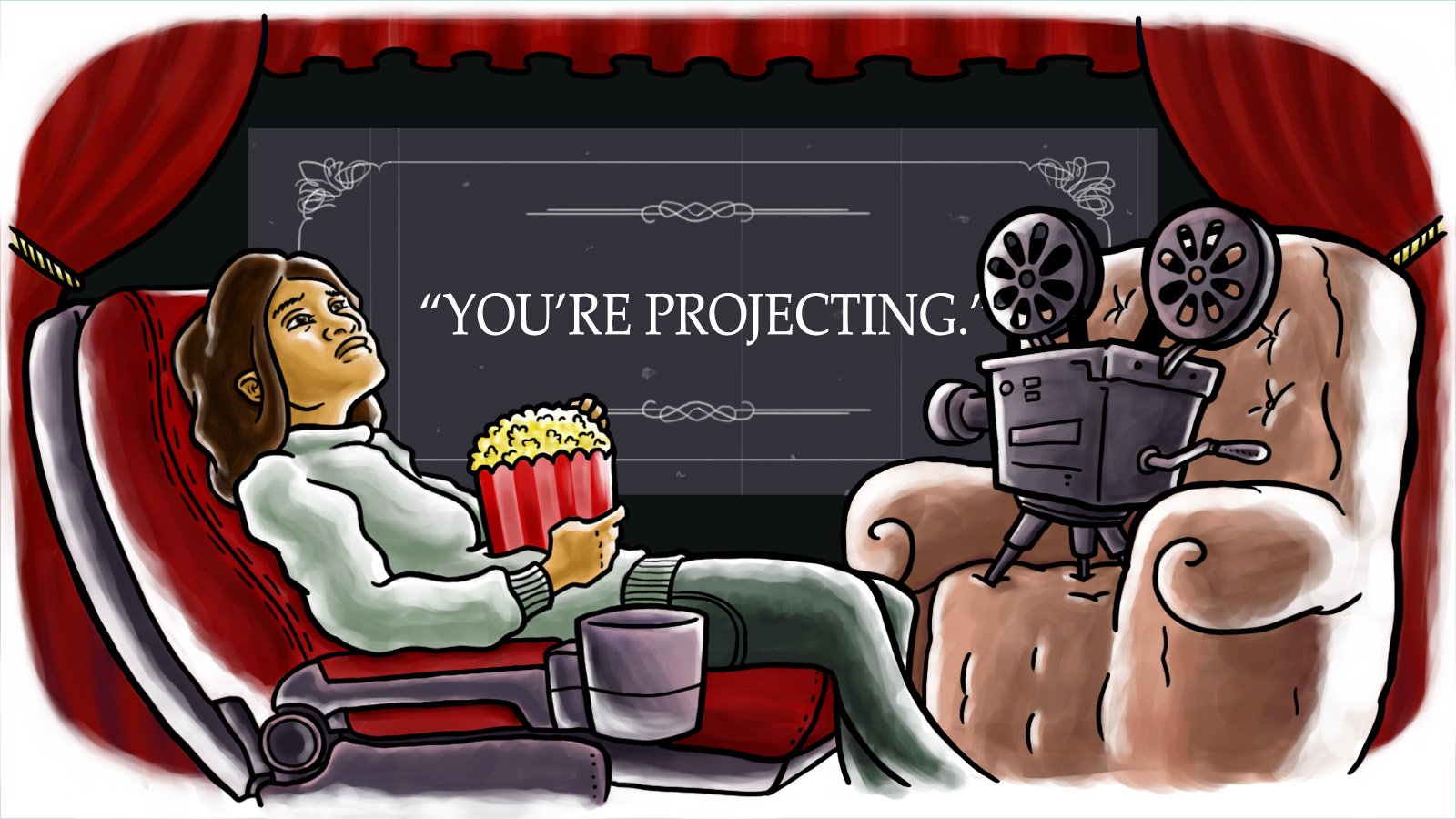

Arrigoni acknowledges a paradox inherent in Loti’s business model: utilizing AI to combat the harmful effects of the same technology.

“I’m selling golden pans and golden picks,” he said, alluding to gold rushes, in which a sub-industry always profits from selling picks and shovels. “It’s a bizarre irony, right?”

The company faces that irony head-on when explaining its methodology to clients’ legal teams. The process requires sensitive permissions, namely scanning facial features and processing biometric data with AI models, which can raise concerns. Arrigoni said Loti addresses these by emphasizing strict limitations on how the data is used and offering contractual assurances that the company will never create generative models of its clients.

Nevertheless, he maintains that this approach represents a pragmatic and ethical application of tools that would otherwise be impossible to deploy through human effort alone.

Russia: out of bounds

While Loti’s technology may effectively counter AI threats across much of the internet, it cannot overcome geopolitical realities. Despite the company’s sophisticated tools and extensive reach, Arrigoni acknowledges that international borders still present barriers.

“We’re pretty clear with clients — Russia is out of bounds,” he said.

Russian-hosted sites typically ignore takedown requests. Loti issues removal notices across multiple layers of internet infrastructure — targeting not only websites but also domain registrars, content delivery networks, and even payment processors — but Russian platforms have developed independent alternatives to these services, rendering such approaches ineffective. Loti is, however, able to get social media posts linked to those sites taken down, which reduces their visibility and accessibility to the average user.

For public figures confronting a deluge of unauthorized digital representations — from explicit fabrications to fraudulent accounts targeting their supporters — such technology offers some recourse in an increasingly complex digital landscape. Many clients report that beyond the technical success, the service provides psychological relief from constantly monitoring their digital presence.

“We get consistent feedback that the problem set is getting smaller,” he said. “The problem is solved for people when they join with us. The mental space dedicated to this is gone.”

